Press Release

WARNING: POSSIBLE HOSTILE TAKEOVER OF FTC BY “DOGE” TEAM UNDER ELON MUSK — CONSUMER PROTECTIONS AT IMMEDIATE RISK

FOR IMMEDIATE RELEASE
April 4, 2025

WASHINGTON, D.C. -- Alarming reports suggest that individuals associated with the so-called “DOGE team,” acting under the direction of Elon Musk, may be maneuvering to infiltrate and dismantle the Federal Trade Commission (FTC) from within. Sources indicate that DOGE staffers have already embedded themselves inside the agency, potentially with access to sensitive consumer data and a vast trove of corporate trade secrets housed within internal data systems. This setup may be laying the groundwork for illegal mass firings and a reduction in force: an unprecedented power grab that could cripple the FTC’s ability to protect the American public.

While details continue to emerge, the pattern is unmistakable. The DOGE team’s recent actions follow a disturbing playbook: bypassing norms, subverting legal processes, and setting the stage for institutional collapse. And there is at least one clear beneficiary: Elon Musk, whose company X (formerly Twitter) remains under a federal consent decree. A neutered FTC would eliminate one of the final safeguards standing between Musk and unchecked surveillance, exploitation, and algorithmic manipulation of users, including children.

The very foundation of federal consumer protection is in danger. The FTC plays an irreplaceable role in:

● Shielding seniors and veterans from fraud
● Defending children’s safety and privacy online
● Blocking deceptive advertising and marketing practices
● Enforcing limits on monopolistic behavior and AI-driven risks

Without a fully functioning FTC, corporations would face fewer consequences, and consumers would be left vulnerable to lawless business practices. This would open the floodgates to a “race to the bottom” economy, one where exploitation, manipulation, and impunity replace fair play, innovation, and trust.
The consequences would be dire, especially for children, vulnerable populations, and small businesses.

We urge Chair Ferguson and Commissioner Holyoak to act now. Stand firm against the DOGE team’s attempted subversion. Uphold your oaths to the institution and to the Constitution.

The American public deserves a functioning watchdog, not a hollowed-out institution hijacked by billionaires seeking to rewrite the rules in their favor.

***

The Center for Digital Democracy is a public interest research and advocacy organization, established in 2001, which works on behalf of citizens, consumers, communities, and youth to protect and expand privacy, digital rights, and data justice. CDD’s predecessor, the Center for Media Education, led the campaign for the passage of the Children's Online Privacy Protection Act over 25 years ago, in 1998.

Press Release

CDD Joins Twenty-Four Other Consumer Protection and Digital Rights Groups in Condemning the Illegal Firing of Two Democratic FTC Commissioners

Groups are urging lawmakers to launch a probe into the recent firings and to block the confirmation of President Donald Trump’s FTC nominee until the fired commissioners get their jobs back.

Read the full letter attached.

Press Release

In Firing The Two Democratic FTC Commissioners, Pres. Trump Places Every American Consumer At Grave Risk

Statement of Jeff Chester, executive director, Center for Digital Democracy
March 19, 2025

In a country where illegality is quickly replacing the rule of law, the action yesterday by Pres. Trump ending decades of bipartisan operations at the Federal Trade Commission (FTC) may seem insignificant. But anyone concerned about lower grocery prices, protecting privacy online, consumer protections for their money when buying goods and services, or whether children and teens can use the Internet without mass surveillance and manipulation should be very, very worried.

Why did Pres. Trump do this now? It’s because the U.S. is on the verge of a transformation in how we shop, buy, view and interact with businesses online and offline, driven by the confluence of Generative Artificial Intelligence, the expansion of pervasive data gathering and personalized profiling, and the deepening of alliances and partnerships across Big Tech, Big Data, retailers, supermarkets, hospitals, data brokers, streaming TV networks and many others. Trump’s allies, especially Amazon, Google, Meta/Facebook, and TikTok, do not want any safeguards, oversight or accountability, especially at a time when they stand to make immense profits.

The Trump Administration is rewarding the very same billionaires and Tech Giants who are responsible for raising the prices we pay for groceries, obliterating our data privacy, and making it unsafe for kids to go on social media. Consumer advocates won’t be silent here, of course, and we will document and sound the alarm as Americans are increasingly victimized by Big Tech and large supermarket chains.

The FTC has been transformed into a Trump Administration and special interest lapdog instead of the federal watchdog guarding the interests and welfare of the public. Americans who struggle to make ends meet, whether they need help securing refunds, recovering from scams, or ensuring fair treatment, will be especially harmed.

But let’s be clear. The action of the Trump Administration to remove the FTC as a serious consumer protection agency has made every American less safe.

--30--

Press Release

Statement on the Reintroduction of the Children and Teens’ Online Privacy Protection Act (COPPA 2.0) by Senators Markey and Cassidy

March 4, 2025
Center for Digital Democracy
Washington, DC
Contact: Katharina Kopp, kkopp@democraticmedia.org

The following statement is attributed to Katharina Kopp, Ph.D., Deputy Director of the Center for Digital Democracy:

“The Children and Teens’ Online Privacy Protection Act, reintroduced by Senators Markey and Cassidy and other Senate co-sponsors, is more urgent than ever. Children’s surveillance has only intensified across social media, gaming, and virtual spaces, where companies harvest data to track, profile, and manipulate young users. COPPA 2.0 will ban targeted ads to those under 16, curbing the exploitation, manipulation, and discrimination of children for profit. By extending protections to teens and requiring a simple ‘eraser button’ to delete personal data, this legislation takes a critical step in restoring privacy rights in an increasingly invasive digital world,” said Katharina Kopp, Deputy Director of the Center for Digital Democracy.

See also the full statement from Senators Markey and Cassidy.

Regulating Digital Food and Beverage Marketing in the Artificial Intelligence & Surveillance Advertising Era

Ultra-processed food companies and their retail, online-platform, quick-service-restaurant, media-network and advertising-technology (adtech) partners are expanding their targeting operations to push the consumption of unhealthy foods and beverages. A powerful array of personalized, data-driven and AI-generated digital food marketing is pervasive online and is also designed to influence us offline (such as when we are at convenience or grocery stores). CDD has a number of reports that reveal the extent of this development, including an analysis of the market, ways to research it, and where policies and safeguards have been enacted.

Unfortunately, there isn’t a single remedy to address such unhealthy marketing. Individuals and families can only do so much to reduce the effects of today’s pervasive tracking and targeting of people and communities via mobile phones, social media, and “smart” TVs. What’s required now is a coordinated set of policies and regulations to govern the ways ultra-processed food companies and their allies conduct online advertising and data collection, especially when public health is involved. Such an effort, moreover, must be broad-based, addressing a variety of policy areas, such as privacy, consumer protection, and antitrust. Formulating and advancing these policies will be an enormous challenge, but it is one that we cannot afford to ignore.

CDD is working to address all of these issues and more. We closely follow the digital marketplace, especially the food, beverage, retail and online-platform industries. We track, analyze and call attention to harmful industry practices, and are helping to build a stronger global movement of advocates dedicated to protecting all of us from this unfair and currently out-of-control system. We are happy to work with you to ensure everyone, in the U.S. and worldwide, can live healthier lives without being constantly exposed to fast-food and other harmful marketing designed to increase corporate bottom lines without regard to the human and environmental consequences.


Press Release

Statement on the Federal Trade Commission’s Amendments to the Children’s Online Privacy Protection Rule

January 16, 2025
Center for Digital Democracy
Washington, DC
Contact: Katharina Kopp, kkopp@democraticmedia.org

The following statement is attributed to Katharina Kopp, Ph.D., Deputy Director of the Center for Digital Democracy:

As digital media becomes increasingly embedded in children’s lives, it more aggressively surveils, manipulates, discriminates against, exploits, and harms them. Families and caregivers know all too well the impossible task of keeping children safe online. Strong privacy protections are critical to ensuring their well-being and safety.

The Federal Trade Commission’s (FTC) finalized amendments to the Children’s Online Privacy Protection Rule (COPPA Rule) are a crucial step forward, enhancing safeguards and shifting the responsibility for privacy protections from parents to service providers. Key updates include:

- Restrictions on hyper-personalized data collection for targeted advertising:
  - Mandating separate parental consent for disclosing personal information to third parties.
  - Prohibiting the conditioning of service access on such consent.
- Limits on data retention:
  - Imposing stricter data retention limits.
- Baseline and default privacy protections:
  - Strengthening purpose specification and disclosure requirements.
- Enhanced data security requirements:
  - Requiring robust information security programs.

We commend the FTC, under Chair Lina Khan’s leadership, for finalizing this much-needed update to the COPPA Rule. The last revision, in 2013, was over a decade ago. Since then, the digital landscape has been radically transformed by practices such as mass data collection, AI-driven systems, cloud-based interconnected platforms, sentiment and facial analytics, cross-platform tracking, and manipulative, addictive design practices.
These largely unregulated transformations, driven by Big Tech and investors, have created a hyper-surveillance environment that is especially harmful and toxic to children and teens.

The data-driven, targeted advertising business model continues to pose daily threats to the health, safety, and privacy of children and their families. The FTC’s updated rule is a small but significant step toward addressing these risks, curbing harmful practices by Big Tech, and strengthening privacy protections for America’s youth online.

To ensure comprehensive safeguards for children and teens in the digital world, it is essential that the incoming FTC leadership enforce the updated COPPA Rule vigorously and without delay. Additionally, it is imperative that Congress enact further privacy protections and establish prohibitions against harmful applications of AI technologies.

* * *

In 2024, a coalition of eleven leading health, privacy, consumer protection, and child rights groups filed comments at the Federal Trade Commission (FTC) offering a digital roadmap for stronger safeguards while also supporting many of the agency’s key proposals for updating its regulations implementing the bipartisan Children’s Online Privacy Protection Act (COPPA). Comments were submitted by Fairplay, the Center for Digital Democracy, the American Academy of Pediatrics, and other advocacy groups.

The Center for Digital Democracy is a public interest research and advocacy organization, established in 2001, which works on behalf of citizens, consumers, communities, and youth to protect and expand privacy, digital rights, and data justice. CDD’s predecessor, the Center for Media Education, led the campaign for the passage of COPPA over 25 years ago, in 1998.

Press Release

Streaming Television Industry Conducting Vast Surveillance of Viewers, Targeting Them with Manipulative AI-driven Ad Tactics, Says New Report

Digital Privacy and Consumer Protection Group Calls on FTC, FCC and California Regulators to Investigate Connected TV Practices

Contact: Jeff Chester, 202-494-7100, Jeff@democraticmedia.org
October 7, 2024

Washington, DC. The Connected TV (CTV) video streaming industry in the U.S. operates a massive data-driven surveillance apparatus that has transformed the television set into a sophisticated monitoring, tracking and targeting device, according to a new report from the Center for Digital Democracy (CDD). How TV Watches Us: Commercial Surveillance in the Streaming Era documents how CTV captures and harvests information on individuals and families through a sophisticated and expansive commercial surveillance system, deliberately incorporating many of the data-gathering, monitoring, and targeting practices that have long undermined privacy and consumer protection online.

The report highlights a number of recent trends that are key to understanding today’s connected TV operations:

- Leading streaming video programming networks, CTV device companies and “smart” TV manufacturers, allied with many of the country’s most powerful data brokers, are creating extensive digital dossiers on viewers based on a person’s identity information, viewing choices, purchasing patterns, and thousands of online and offline behaviors.
- So-called FAST channels (Free Advertiser-Supported TV), such as Tubi, Pluto TV, and many others, are now ubiquitous on CTV and a key part of the industry’s strategy to monetize viewer data and target viewers with sophisticated new forms of interactive marketing.
- Comcast/NBCU, Disney, Amazon, Roku, LG and other CTV companies operate cutting-edge advertising technologies that gather and analyze data and then target consumers with ads, delivering them to households in milliseconds.
- CTV has unleashed a powerful arsenal of interactive advertising techniques, including virtual product placement inserted into programming and altered in real time. Generative AI enables marketers to produce thousands of instantaneous “hypertargeted variations” personalized for individual viewers.
- Surveillance has been built directly into television sets, with major manufacturers’ “smart TVs” deploying automatic content recognition (ACR) and other monitoring software to capture “an extensive, highly granular, and intimate amount of information that, when combined with contemporary identity technologies, enables tracking and ad targeting at the individual viewer level,” the report explains.
- Connected television is now integrated with online shopping services and offline retail outlets, creating a seamless commercial and entertainment culture through a number of techniques, including what the industry calls “shoppable ad formats” incorporated into programming and designed to prompt viewers to “purchase their favorite items without disrupting their viewing experience,” according to industry materials.

The report profiles major players in the connected TV industry, along with the wide range of technologies they use to monitor and target viewers. For example:

- Comcast’s NBCUniversal division has developed its own data-driven ad-targeting system called “One Platform Total Audience.” It powers NBCU’s “streaming activation” of consumers targeted across “300 end points,” including their streaming video programming and mobile phone use. Advertisers can use the “machine learning and predictive analytics” capabilities of One Platform, including its “vast… first-party identity spine” that can be coupled with their own data sets “to better reach the consumers who matter most to brands.” NBCU’s “Identity graph houses more than 200 million individuals 18+, more than 90 million households, and more than 3,000 behavioral attributes” that can be accessed for strategic audience targeting.
- The Walt Disney Company has developed a state-of-the-art big-data and advertising system for its video operations, including through Disney+ and its “kids” content. Its materials promise to “leverage streaming behavior to build brand affinity and reward viewers” using tools such as the “Disney Audience Graph—consisting of millions of households, CTV and digital device IDs… continually refined and enhanced based on the numerous ways Disney connects with consumers daily.” The company claims that its ID Graph incorporates 110 million households and 260 million device IDs that can be targeted for advertising using “proprietary” and “precision” advertising categories “built from 100,000 [data] attributes.”
- Set manufacturer Samsung promises advertisers a wealth of data to reach their targets, deploying a variety of surveillance tools, including an ACR technology system that “identifies what viewers are watching on their TV on a regular basis,” and gathers data from a spectrum of channels, including “Linear TV, Linear Ads, Video Games, and Video on Demand.” It can also determine which viewers are watching television in English, Spanish, or other languages, and the specific kinds of devices that are connected to the set in each home.

“The transformation of television in the digital era has taken place over the last several years largely under the radar of policymakers and the public, even as concerns about internet privacy and social media have received extensive media coverage,” the report explains. “The U.S. CTV streaming business has deliberately incorporated many of the data-surveillance marketing practices that have long undermined privacy and consumer protection in the ‘older’ online world of social media, search engines, mobile phones and video services such as YouTube.”

The industry’s self-regulatory regimes are highly inadequate, the report authors argue. “Millions of Americans are being forced to accept unfair terms in order to access video programming, which threatens their privacy and may also narrow what information they access—including the quality of the content itself. Only those who can afford to pay are able to ‘opt out’ of seeing most of the ads—although much of their data will still be gathered.”

The massive surveillance and targeting practices of today’s connected TV industry raise a number of concerns, the report explains. For example, during this election year, CTV has become the fastest-growing medium for political ads. “Political campaigns are taking advantage of the full spectrum of ad-tech, identity, data analysis, monitoring and tracking tools deployed by major brands.” While these tools are no doubt a boon to campaigns, they also make it easy for candidates and other political actors to “run covert personalized campaigns, integrating detailed information about viewing behaviors, along with a host of additional (and often sensitive) data about a voter’s political orientations, personal interests, purchasing patterns, and emotional states. With no transparency or oversight,” the authors warn, “these practices could unleash millions of personalized, manipulative and highly targeted political ads, spread disinformation, and further exacerbate the political polarization that threatens a healthy democratic culture in the U.S.”

“CTV has become a privacy nightmare for viewers,” explained report co-author Jeff Chester, who is the executive director of CDD.
“It is now a core asset for the vast system of digital surveillance that shapes most of our online experiences. Not only does CTV operate in ways that are unfair to consumers, it is also putting them and their families at risk as it gathers and uses sensitive data about health, children, race and political interests,” Chester noted. “Regulation is urgently needed to protect the public from constantly expanding and unfair data collection and marketing practices,” he said, “as well as to ensure a competitive, diverse and equitable marketplace for programmers.”

“Policy makers, scholars, and advocates need to pay close attention to the changes taking place in today’s 21st century television industry,” argued report co-author Kathryn C. Montgomery, Ph.D. “In addition to calling for strong consumer and privacy safeguards,” she urged, “we should seize this opportunity to re-envision the power and potential of the television medium and to create a policy framework for connected TV that will enable it to do more than serve the needs of advertisers. Our future television system in the United States should support and sustain a healthy news and information sector, promote civic engagement, and enable a diversity of creative expression to flourish.”

CDD is submitting letters today to the chairs of the FTC and FCC, as well as the California Attorney General and the California Privacy Protection Agency, calling on policymakers to address the report’s findings and implement effective regulations for the CTV industry.

CDD’s mission is to ensure that digital technologies serve and strengthen democratic values, institutions and processes. CDD strives to safeguard privacy and civil and human rights, as well as to advance equity, fairness, and community

--30--

Press Release

Statement Regarding the FTC 6(b) Study on Data Practices of Social Media and Video Streaming Services

“A Look Behind the Screens: Examining the Data Practices of Social Media and Video Streaming Services”

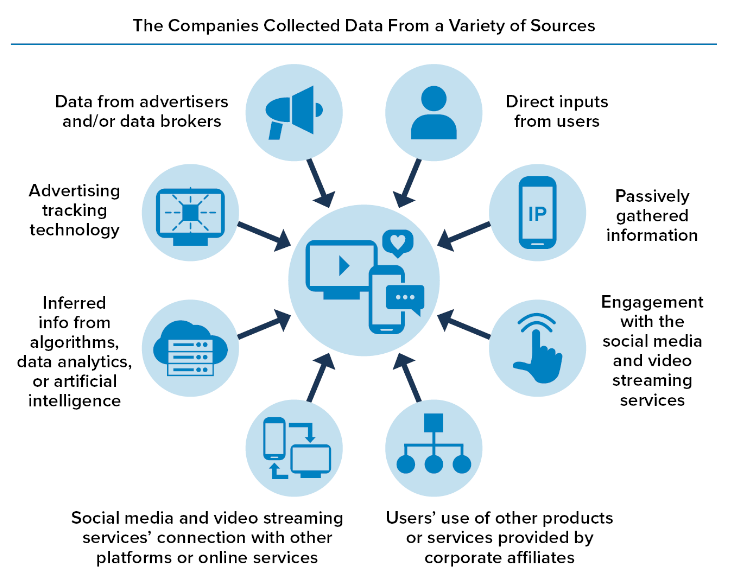

Center for Digital Democracy
Washington, DC
Contact: Katharina Kopp, kkopp@democraticmedia.org

The following statement can be attributed to Katharina Kopp, Ph.D., Deputy Director, Center for Digital Democracy:

The Center for Digital Democracy welcomes the release of the FTC’s 6(b) study on social media and video streaming providers’ data practices and its evidence-based recommendations.

In 2019, Fairplay (then the Campaign for a Commercial-Free Childhood (CCFC)), the Center for Digital Democracy (CDD), 27 other organizations, and their attorneys at Georgetown Law’s Institute for Public Representation urged the Commission to use its 6(b) authority to better understand how tech companies collect and use data from children.

The report’s findings show that social media and video streaming providers’ business model produces an insatiable hunger for data about people. These companies create a vast surveillance apparatus, sweeping up personal data and creating an inescapable matrix of AI applications. These data practices lead to numerous well-documented harms, particularly for children and teens. These harms include manipulation and exploitation, loss of autonomy, discrimination, hate speech and disinformation, the undermining of democratic institutions, and, most importantly, the pervasive mental health crisis among youth.

The FTC's call for comprehensive privacy legislation is crucial in curbing the harmful business model of Big Tech. We support the FTC’s recommendation to better protect teens but call, specifically, for a ban on targeted advertising to do so.
We strongly agree with the FTC that companies should be prohibited from exploiting young people's personal information, weaponizing AI and algorithms against them, and using their data to foster addiction to streaming videos.

That is why we urge this Congress to pass COPPA 2.0 and KOSA, which would compel Big Tech companies to acknowledge the presence of children and teenagers on their platforms and would hold them accountable. The responsibility for rectifying the flaws in their data-driven business model rests with Big Tech, and we express our appreciation to the FTC for highlighting this important fact.

________________

The Center for Digital Democracy is a public interest research and advocacy organization, established in 2001, which works on behalf of citizens, consumers, communities, and youth to protect and expand privacy, digital rights, and data justice. CDD’s predecessor, the Center for Media Education, led the campaign for the passage of COPPA over 25 years ago, in 1998.

Press Release

Statement Regarding the U.S. Senate Vote to Advance the Kids Online Safety and Privacy Act (S. 2073)

Center for Digital Democracy
Washington, DC
July 30, 2024
Contact: Katharina Kopp, kkopp@democraticmedia.org

The following statement can be attributed to Katharina Kopp, Ph.D., Deputy Director, Center for Digital Democracy:

Today is a milestone in the decades-long effort to protect America’s young people from the harmful impacts caused by the out-of-control and unregulated social and digital media business model. The Kids Online Safety and Privacy Act (KOSPA), if passed by the House and enacted into law, will safeguard children and teens from digital marketers who manipulate them and employ unfair data-driven marketing tactics to closely surveil, profile, and target young people. These include an array of ever-expanding tactics that are discriminatory and unfair. The new law would protect children and teens from being exposed to addictive algorithms and other harmful practices across online platforms, protecting the mental health and well-being of youth and their families.

Building on the foundation of the 25-year-old Children's Online Privacy Protection Act (COPPA), KOSPA will provide protections to teens under 17. It will prohibit targeted advertising to children and teens, impose data minimization requirements, and compel companies to acknowledge the presence of young online users on their platforms and apps. The safety-by-design provisions will require the disabling of addictive product features and give users the option to opt out of addictive algorithmic recommender systems. Companies will be obliged to prevent and mitigate harms to children, such as bullying and violence, promotion of suicide, eating disorders, and sexual exploitation. Crucially, most of these safeguards will be automatically implemented, relieving children, teens, and their families of the burden to take further action.
The responsibility to rectify the worst aspects of their data-driven business model will lie squarely with Big Tech, as it should have all along.

We express our gratitude for the leadership and support of Majority Leader Schumer, Chairwoman Cantwell, Ranking Member Cruz, and Senators Blumenthal, Blackburn, Markey, Cassidy, along with their staff, for making this historic moment possible in safeguarding children and teens online. Above all, we are thankful to all the parents and families who have tirelessly advocated for common-sense safeguards. We now urge the House of Representatives to act upon its return from the August recess. The time for hesitation is over.

About the Center for Digital Democracy (CDD)

The Center for Digital Democracy is a public interest research and advocacy organization, established in 2001, which works on behalf of citizens, consumers, communities, and youth to protect and expand privacy, digital rights, and data justice. CDD’s predecessor, the Center for Media Education, led the campaign for the passage of COPPA over 25 years ago, in 1998.

Press Release

Statement from children’s advocacy organizations regarding the House Energy and Commerce Committee Subcommittee on Innovation, Data and Commerce May 23 markup of legislation addressing kids online safety and privacy

"Children and Teens Need Effective Privacy Protections Now"

“We have two reactions to the legislation the House Energy and Commerce Subcommittee on Innovation plans to consider on Thursday. On the one hand, we appreciate that the subcommittee is taking up the Kids Online Safety Act (KOSA) as a standalone bill, as we had recommended. Congressional action to make the internet safer for kids and teens is long overdue, so we welcome this step tomorrow. As the Members know, KOSA has 69 co-sponsors in the Senate, has bipartisan support in both houses, and has overwhelming popular support across the country, as demonstrated by numerous public polls and by strong advocacy actions in the Capitol and elsewhere.

“On the other hand, we are very disappointed in the privacy legislation the subcommittee will take up as it relates to children and teens. We have always maintained, and the facts are clear on this, that stronger data privacy protections for kids and teens should go hand in hand with new social media platform guardrails. The bipartisan COPPA 2.0 (H.R. 7890), which would update the 25-year-old children's privacy law, is not being considered as a standalone bill on Thursday. Further, we are very disappointed with the revised text of the American Privacy Rights Act (APRA) discussion draft as it relates to children and teen privacy.

“When the APRA discussion draft was first unveiled on April 7th, we understood Committee leadership to say that the bill would need stronger youth privacy protections. More than a month later, there are no new effective protections for youth in the new draft that was released at 10:00 pm last night, compared with the original discussion draft from April. The new APRA discussion draft purports to include COPPA 2.0 (as Title II) but, in terms of protections for children and teens, does not live up to the standalone COPPA 2.0 legislation introduced in the House last month and supported on a bipartisan, bicameral basis.
Furthermore, the youth provisions in APRA’s discussion draft appear impossible to implement without additional clarifications. Lastly, in addition to these highly significant shortcomings, we are also concerned that Title II of the new APRA draft may actually weaken current privacy protections for children by limiting coverage of sites and services.

“Children and teens need effective privacy protections now. We expect the Committee to do better than this, and we look forward to working with them to make sure that once and for all Congress puts kids and teens first when it comes to privacy and online safety.”

Signed: Center for Digital Democracy, Common Sense Media, and Fairplay

Blog

CDD Urges the House to Promptly Mark Up HR 7890, the Children and Teens' Online Privacy Protection Act (COPPA 2.0)

COPPA 2.0 provides a comprehensive approach to safeguarding children’s and teens’ privacy via data minimization and a prohibition on targeted advertising: it’s not “just” about “notice and consent”

We commend Rep. Walberg (R-MI) and Rep. Castor (D-FL) for introducing the House COPPA 2.0 companion bill. The bill enjoys strong bipartisan support in the U.S. Senate. We urge the House to promptly mark up HR 7890 and pass it into law. Any delay in bringing HR 7890 to a vote would expose children, adolescents, and their families to greater harm.

Learn more about the Children and Teens’ Online Privacy Protection Act below.

Background:

The United States is currently facing a commercial surveillance crisis. Digital giants invade our private lives, spy on our families, deploy manipulative and unfair data-driven marketing practices, and exploit our most personal information for profit. These reckless practices are leading to unacceptable invasions of privacy, discrimination, and public health harms.

In particular, the United States faces a youth mental health crisis fueled, in part, by Big Tech. According to the American Academy of Pediatrics, children’s mental health has reached the status of a national emergency. The Centers for Disease Control and Prevention found that in 2021, one in three high school girls contemplated suicide, one in ten high school girls attempted suicide, and among LGBTQ+ youth, more than one in five attempted suicide. As the Surgeon General concluded in a report last year, “there are ample indicators that social media can also have a profound risk of harm to the mental health and well-being of children and adolescents.”

Platforms’ data practices and their prioritization of profit over the well-being of America’s youth significantly contribute to the crisis. The lack of privacy protections for children and teens has led to a decline in young people’s well-being.
Platforms rely on vast amounts of data to build detailed profiles of young users and target them with tailored ads. To gather that data, they employ addictive design features that keep young users online for longer periods of time, exacerbating the youth mental health crisis. The formula is simple: more addiction means more data and more targeted ads, which translate into greater profits for Big Tech. In fact, according to a recent Harvard study, in 2022 the major Big Tech platforms earned nearly $11 billion in ad revenue from U.S. users age 17 and younger.

The Children and Teens’ Online Privacy Protection Act (COPPA 2.0) modernizes and strengthens the only online privacy law for children, the Children’s Online Privacy Protection Act (COPPA). COPPA was passed over 25 years ago and urgently needs an update, including the extension of its safeguards to teens. After the bill passed out of committee in the Senate, Reps. Tim Walberg (R-MI) and Kathy Castor (D-FL) introduced COPPA 2.0 in the House in April. It’s time now for the House to act.
The Children and Teens’ Online Privacy Protection Act would:

- Build on COPPA and extend privacy safeguards to users who are 13 to 16 years of age;
- Require strong data minimization safeguards prohibiting the excessive collection, use, and sharing of children’s and teens’ data. COPPA 2.0 would:
  - Prohibit the collection, use, disclosure, or maintenance of personal information for purposes of targeted advertising;
  - Prohibit the collection of personal information except when the collection is consistent with the context and necessary to fulfill a transaction or service or provide a product or service requested;
  - Prohibit the retention of personal information for longer than is reasonably necessary to fulfill a transaction or provide a service;
  - Prohibit conditioning a child’s or teen’s participation on the child’s or teen’s disclosing more personal information than is reasonably necessary.
  - Except for certain internal permissible operations purposes of the operator, require parental or teen consent for all other data collection or disclosures.
- Ban targeted advertising to children and teens;
- Provide for parental or teen controls, including the right to correct or delete personal information collected from a child or teen, or content or information submitted by a child or teen to a website, when technologically feasible;
- Revise COPPA’s “actual knowledge” standard to close the loophole that allows covered platforms to ignore kids and teens on their sites.

But what about... notice and consent? Doesn’t COPPA 2.0 rely on the so-called “notice and consent” approach, and isn’t the consensus that this is an ineffective way to protect privacy online?

No, COPPA 2.0 does not rely on “notice and consent.” It provides a comprehensive approach to safeguarding children’s and teens’ privacy via data minimization. It appropriately restricts, by default, companies’ ability to collect, use, and share the personal information of children and teens.
The consent mechanism is just one additional safeguard.

1. By default, COPPA 2.0 would prohibit:
   - the collection, use, disclosure, or maintenance of personal information for purposes of targeted advertising to children and teens. This is a flat-out ban; consent is not required.
   - the collection of personal information except when the collection is consistent with the context of the child’s or teen’s relationship with the operator and necessary to fulfill a transaction or service or provide a product or service requested.
   - the retention of personal information for longer than is reasonably necessary to fulfill a transaction or provide a service.
2. By extending COPPA protections to teens, COPPA 2.0 would further limit data collection from children and teens, as it would prohibit conditioning a child’s or teen’s participation in a game, the offering of a prize, or another activity on the child’s or teen’s disclosing more personal information than is reasonably necessary to participate in such activity.
3. Any other personal information that companies want to collect, use, or disclose requires the consent of a parent or teen, except for certain internal permissible operations purposes of the operator.

COPPA 2.0’s data minimization provisions prevent the collection, use, disclosure, and retention of excessive data from the start. Because unnecessary data is neither collected nor retained, the harmful, manipulative, and exploitative business practices that depend on it are prevented. The consent provision is important but plays a relatively small role in the privacy safeguards of COPPA 2.0.
But what about... targeted advertising? Don’t we need a clear ban on targeted advertising to children and teens?

Yes, and COPPA 2.0 bans targeted advertising while allowing contextual advertising. Under COPPA 2.0, it is unlawful for an operator “to collect, use, disclose to third parties, or maintain personal information of a child or teen for purposes of individual-specific advertising to children or teens (or to allow another person to collect, use, disclose, or maintain such information for such purpose).”

But what about... the transfer of personal information to third parties?

COPPA 2.0 requires verifiable consent from a parent or teen for the disclosure or transfer of personal information to a third party, except for certain internal permissible operations purposes of the operator. Verifiable consent must be obtained before the collection, use, and disclosure of personal information, via direct notice of the operator’s personal information collection, use, and disclosure practices. Any material change from the original purposes also requires verifiable consent.

(Note that under 16 CFR 312.5(a)(2), the existing COPPA Rule requires an operator to give a parent the option to consent to the collection and use of the child’s personal information without consenting to the disclosure of his or her personal information to third parties. The FTC recently proposed to bolster this provision in its COPPA Rule update.)

Press Release

Statement Regarding House Energy & Commerce Hearing: “Legislative Solutions to Protect Kids Online and Ensure Americans’ Data Privacy Rights”

Center for Digital Democracy
April 17, 2024
Washington, DC
Contact: Katharina Kopp, kkopp@democraticmedia.org

The following statement can be attributed to Katharina Kopp, Ph.D., Deputy Director, Center for Digital Democracy:

The Center for Digital Democracy (CDD) welcomes the bicameral and bipartisan effort to come together and produce the American Privacy Rights Act (APRA) discussion draft. We have long advocated for comprehensive privacy legislation that would protect everyone’s privacy rights and provide default safeguards.

The United States confronts a commercial surveillance crisis, where digital giants invade our private lives, spy on our families, and exploit our most personal information for profit. Through a vast, opaque system of algorithms, we are manipulated, profiled, and sorted into winners and losers based on data about our health, finances, location, gender, race, and other personal information. The impacts of this commercial surveillance system are especially harmful for marginalized communities, fostering discrimination and inequities in employment, government services, health and healthcare, education, and other life necessities. The absence of a U.S. privacy law jeopardizes not only our individual autonomy but also our democracy.

However, based on our reading of the APRA draft, we have several questions and concerns that suggest the document needs substantial revision. While the legislation addresses many of our requirements for comprehensive privacy legislation, we oppose various provisions in their current form, including:

- Insufficient limitations on targeted advertising and de facto sharing of consumer data: The current data-driven, targeted-ad-supported business model is the key driver of commercial exploitation, manipulation, and discrimination. APRA, however, allows the continuation and proliferation of “first-party” targeted advertising without any recourse for individuals. Most targeted advertising today relies on first-party data and widely accepted de facto sharing practices like “data clean rooms.” APRA should not provide any carve-out for first-party targeted advertising.
- Overbroad preemption language: APRA’s preemption of state privacy laws prevents states from implementing stronger privacy protections. Considering that, three decades after pervasive digital marketing practices became established, the U.S. has still not passed comprehensive privacy legislation, it would be short-sighted and reckless to believe that APRA in its current form can adequately protect online privacy in the long run without pressure from states to innovate. Technology and data practices are rapidly evolving, and so is our understanding of their harms. The preemption language is particularly careless because APRA contains almost no provisions giving the FTC the ability to update it through effective rulemaking.

CDD strongly supports the Children and Teens’ Online Privacy Protection Act (COPPA 2.0), HR 7890. Children and teens require additional privacy safeguards beyond those outlined in APRA. Digital marketers are increasingly employing manipulative and unfair data-driven marketing tactics to profile, target, discriminate against, and exploit children and teens on all the online platforms they use. This is leading to unacceptable invasions of privacy and public health harms. The Children and Teens’ Online Privacy Protection Act (COPPA 2.0) is urgently needed to provide crucial safeguards and to update federal protections that were initially established almost 25 years ago. We commend Rep. Walberg (R-Mich.) and Rep. Castor (D-FL) for introducing the House COPPA 2.0 companion bill. The bill enjoys strong bipartisan support in the U.S. Senate.
We urge Congress to promptly pass this legislation into law. Any delay in bringing HR 7890 to a vote would expose children, adolescents, and their families to greater harm.

CDD strongly supports the Kids Online Safety Act (KOSA) and believes that children and teens require robust privacy safeguards and additional online protections. Social media platforms such as Meta, TikTok, YouTube, and Snapchat have prioritized their financial interests over the well-being of young users for too long. These companies should be held accountable for the safety of America’s youth and take measures to prevent harms like eating disorders, violence, substance abuse, sexual exploitation, addiction-like behaviors, and the exploitation of privacy. We applaud the efforts of Reps. Gus Bilirakis (R-FL), Kathy Castor (D-FL), Erin Houchin (R-IN), and Kim Schrier (D-WA) on the introduction of KOSA. The Senate has shown overwhelming bipartisan support for this legislation, and we urge the House to vote on KOSA, adopt the Senate’s knowledge standard, and make the following amendments to ensure its effectiveness:

- Extend the duty of care to all covered platforms, including video gaming companies, rather than just the largest ones.
- Define the “duty of care” to cover “patterns of use that indicate or encourage addiction-like behaviors” rather than simply “compulsive usage.” This will ensure a broader scope that addresses more addiction-like behaviors.
- Retain the consideration of financial harms within the duty of care.

We believe these adjustments will provide much-needed improvements to the safety of young internet users.

###

Press Release

Children’s Advocates Urge the Federal Trade Commission to Enact 21st Century Privacy Protections for Children

More than ten years since last review, organizations urge the FTC to update the Children’s Online Privacy Protection Act (COPPA)

FOR IMMEDIATE RELEASE
Contact:
David Monahan, Fairplay: david@fairplayforkids.org
Jeff Chester, Center for Digital Democracy: jeff@democraticmedia.org

WASHINGTON, DC — Tuesday, March 12, 2024 – A coalition of eleven leading health, privacy, consumer protection, and child rights groups has filed comments at the Federal Trade Commission (FTC) offering a digital roadmap for stronger safeguards while also supporting many of the agency’s key proposals for updating its regulations implementing the bipartisan Children’s Online Privacy Protection Act (COPPA).

Comments submitted by Fairplay, the Center for Digital Democracy, the American Academy of Pediatrics, and other advocacy groups supported many of the changes the commission proposed in its Notice of Proposed Rulemaking issued in December 2023. The groups, however, told the FTC that a range of additional protections is required to address the “Big Data” and Artificial Intelligence (AI)-driven commercial surveillance marketplace operating today, where children and their data are a highly prized and sought-after target across online platforms and applications.

“The ever-expanding system of commercial surveillance marketing online that continually tracks and targets children must be reined in now,” said Katharina Kopp, Deputy Director, Director of Policy, Center for Digital Democracy. “The FTC and children’s advocates have offered a digital roadmap to ensure that data gathered from children has the much-needed safeguards.
With kids being a key and highly lucrative target desired by platforms, advertisers, and marketers, and with growing invasive tactics such as AI used to manipulate them, we call on the FTC to enact 21st-century rules that place the privacy and well-being of children first.”

“In a world where streaming and gaming, AI-powered chatbots, and ed tech in classrooms are exploding, children’s online privacy is as important as ever,” said Haley Hinkle, Policy Counsel, Fairplay. “Fairplay and its partners support the FTC’s efforts to ensure the COPPA Rule meets kids’ and families’ needs, and we lay out the ways in which the Rule addresses current industry practices. COPPA is a critical tool for keeping Big Tech in check, and we urge the Commission to adopt the strongest possible Rule in order to protect children into the next decade of technological advancement.”

While generally supporting the commission’s proposal to give parents or caregivers greater control over the collection of a child’s data through their consent, the groups told the commission that a number of improvements and clarifications are required to ensure that privacy protections for a child’s data are effectively implemented.
They include issues such as:

● The emerging risks posed to children by AI-powered chatbots and biometric data collection.
● The need to apply COPPA’s data minimization requirements to data collection, use, and retention to reduce the amount of children’s data in the audience economy and to limit targeted marketing.
● The applicability of the Rule’s provisions – including notice, separate parental consent for collection and for disclosure, and data minimization requirements – to the vast networks of third parties that claim to share children’s data in privacy-safe ways, including “clean rooms,” but still use young users’ personal information for marketing.
● The threats posed to children by ed tech platforms and the necessity of strict limitations on any use authorized by schools.
● The need for clear notice, security program, and privacy program requirements in order to effectively realize COPPA’s limitations on the collection, use, and sharing of personal information.

The 11 organizations that signed on to the comments are: the Center for Digital Democracy (CDD); Fairplay; American Academy of Pediatrics; Berkeley Media Studies Group; Children and Screens: Institute of Digital Media and Child Development; Consumer Federation of America; Center for Humane Technology; Eating Disorders Coalition for Research, Policy, & Action; Issue One; Parents Television and Media Council; and U.S. PIRG.

###

Press Release

Press Statement - CDD supports revisions to Kids Online Safety Act (KOSA) that now has majority of Senate backing

Washington, DC
February 15, 2024

For too long, social media platforms have prioritized their financial interests over the well-being of young users. Meta, TikTok, YouTube, Snap, and other companies should be held accountable for the safety of America’s youth. They must be required to prevent harms to kids such as eating disorders, violence, substance abuse, sexual exploitation, and the exploitation of their privacy. The Kids Online Safety Act (KOSA) would require platforms to implement the most protective privacy and safety settings by default. It would help prevent the countless tragic outcomes experienced by too many children and their parents. We support the updated language of the Kids Online Safety Act and urge Congress to pass the bill promptly.

Katharina Kopp, Ph.D.
Director of Policy, Center for Digital Democracy

Press Release

Press Statement - CDD supports update to Children and Teens’ Online Privacy Protection Act (COPPA 2.0) and urges Congress to adopt these stronger privacy safeguards

Washington, DC
February 15, 2024

Digital marketers are unleashing a powerful and pervasive set of unfair and manipulative tactics to target and exploit children and teens. Wherever they go online (social media, viewing videos, listening to music, or playing games), they are stealthily “accompanied” by an array of marketing practices designed to profile and manipulate them. The Children and Teens’ Online Privacy Protection Act (COPPA 2.0) will provide urgently needed online privacy safeguards for children and teens and update legislation first enacted nearly 25 years ago. The proposed new law will deliver real accountability to digital media companies and help limit the harms now experienced by children and teens online. For example, by stopping data-driven targeted ads to young people under 17, it will significantly reduce the endless stream of information harvested by online companies. Other safeguards will limit the collection of personal information for other purposes. COPPA 2.0 will also extend the original COPPA law’s protections, which cover children 12 and under, to youth up to 16 years of age. The proposed law also makes it easy to delete children’s and teens’ data. Young people will also be better protected from the myriad methods used to profile them, which have unleashed numerous discriminatory and other harmful practices. An updated knowledge standard will make this legislation easier to enforce.

We welcome the bipartisan updated text from co-sponsors Sen. Markey and Sen. Cassidy and new co-sponsors Chair Sen. Cantwell (D-WA) and Ranking Member Sen. Cruz (R-TX).

Katharina Kopp, Ph.D.
Director of Policy, Center for Digital Democracy

Press Release

Leading Advocates for Children Applaud FTC Update of COPPA Rule

Fairplay and the Center for Digital Democracy see a crucial step toward creating safer online experiences for kids

Contact:
David Monahan, Fairplay, david@fairplayforkids.org
Jeff Chester, CDD, jeff@democraticmedia.org

WASHINGTON, DC — December 20, 2023—The Federal Trade Commission has proposed its first update of rules protecting children’s privacy in a decade. Under the bipartisan Children’s Online Privacy Protection Act of 1998 (COPPA), children under 13 currently have a set of core safeguards designed to control and limit how their data can be gathered and used. The COPPA Rule was last revised in 2012, and today’s proposed changes offer new protections to ensure young people can go online without losing control over their personal information. These new rules would help create a safer, more secure, and healthier online environment for them and their families, precisely at a time when they face growing threats to their wellbeing and safety. The provisions offered today are especially needed to address the emerging methods that platforms and other digital marketers use to collect children’s data, including the growing use of AI and other techniques.

Haley Hinkle, Policy Counsel, Fairplay:
“The FTC’s recent COPPA enforcement actions against Epic Games, Amazon, Microsoft, and Meta demonstrated that Big Tech does not have carte blanche with kids’ data. With this critical rule update, the FTC has further delineated what companies must do to minimize data collection and retention and ensure they are not profiting off of children’s information at the expense of their privacy and wellbeing. Anyone who believes that children deserve to explore and play online without being tracked and manipulated should support this update.”

Katharina Kopp, Ph.D., Director of Policy, Center for Digital Democracy:
“Children face growing threats as platforms, streaming and gaming companies, and other marketers pursue them for their data, attention, and profits. Today’s proposed FTC COPPA rule update provides urgently needed online safeguards to help stem the tidal wave of personal information gathered on kids. The commission’s plan will limit data uses involving children and help prevent companies from exploiting their information. These rules will also protect young people from being targeted through the increasing use of AI, which now further fuels data collection efforts. Young people 12 and under deserve a digital environment that is designed to be safer for them and that fosters their health and well-being. With this proposal, we should soon see less online manipulation, less purposefully addictive design, and fewer discriminatory marketing practices.”

###

The insatiable quest to acquire more data has long been a force behind corporate mergers in the US—including the proposed combination of supermarket giants Albertsons and Kroger. Both grocery chains have amassed a powerful set of internal “Big Data” digital marketing assets, accompanied by alliances with data brokers, “identity” management firms, advertisers, streaming video networks, and social media platforms. Albertsons and Kroger are leaders in one of the fastest-growing sectors in the online surveillance economy—called “retail media.” Retail media is expected to generate $85 billion in ad spending in the US by 2026, and, with Amazon’s success as a model, retailers are in a new digital “gold rush” to cash in on their loyalty programs, sales information, and other growing ways to target their customers.

Albertsons, Kroger, and other retailers including Walmart, CVS, Dollar General, and Target find themselves in an enviable position in what’s being called the “post-cookie” era. As digital marketing abandons traditional user-tracking technologies, especially third-party cookies, in response to privacy regulations, leading advertisers and platforms are lining up to access consumer information they believe comes with less regulatory risk. Supermarkets, drug stores, retailers, and video streaming networks have massive amounts of so-called “first-party” authenticated data on consumers, which they claim comes with consent to use for online marketing. That’s why retail media networks operated by Kroger and others, as well as data harvested from streaming companies, are among the hottest commodities in today’s commercial surveillance economy.
It’s not surprising that Albertsons and Kroger now have digital marketing partnerships with companies like Disney, Comcast/NBCUniversal, Google, and Meta—to name just a few.

The Federal Trade Commission (FTC) is currently reviewing this deal, which is a test case of how well antitrust regulators address the dominant role that data and the affordances of digital marketing play in the marketplace. The “Big Data” digital marketing era has upended many traditional marketplace structures; consolidation is accompanied by a string of deals that further concentrate power among incumbents and their allies. What’s called “collaboration”—in which multiple parties work together to extend individual and collective data capabilities—is now a key feature operating across the broader online economy, and is central to the Kroger/Albertsons transaction. Antitrust law has thus far failed to address one of the glaring threats arising from so many mergers today: their impact on privacy, consumer protection, and diversity of media ownership. Consider all the transactions that the FTC and Department of Justice have allowed in recent years, such as the scores of Google and Facebook acquisitions, and the deleterious impact they have had on competition, data protection, and other societal outcomes.

Under Chair Lina Khan, the FTC has awakened from what I have called its long “digital slumber,” moving to the forefront in challenging proposed mergers and working to develop more effective privacy safeguards. My organization told the commission that its antitrust case must address the role data-driven marketing plays in the Albertsons and Kroger merger, and how consolidating the two companies’ digital operations is central to their goals for the deal.

Kroger has been at the forefront of understanding how the sales and marketing of groceries and other consumer products have to operate simultaneously in-store and online.
It acquired a leading “retail, data science, insights and media” company in 2015, which it named 84.51° after its geographic coordinates in Cincinnati. Today, 84.51° touts its capabilities to leverage “data from over 62 million households” in influencing consumer buying behavior “both in-store and online,” using “first party retail data from nearly 1 of 2 US households and more than two billion transactions.” Kroger’s retail media division, called “Precision Marketing,” draws on the prowess of 84.51° to sell a range of sophisticated data targeting opportunities to advertisers, including leading brands that stock its in-store and online shelves. For example, ads can be delivered to customers when they search for a product on the Kroger website or its app, when they view digital discount coupons, and when they visit non-Kroger-owned sites.

These initiatives have created a number of opportunities for Kroger to make money from data. Last year, Precision Marketing opened its “Private Marketplace” service, which enables advertisers to access Kroger customers via targeting lists of what are known as “pre-optimized audiences” (groups of consumers who have been analyzed and identified as potential customers for various products). Like other retailers, Kroger has data and ad deals with video streaming companies, including Disney and Roku. Its alliance with Disney enables it to take advantage of that entertainment company’s major data-marketing assets, including AI tools and the ability to target consumers using its “250 million user IDs.”

Likewise, the Albertsons “Media Collective” division promises advertisers that its retail media “platform” connects them to “over 100 million consumers.” It offers grocery brands marketing opportunities similar to Kroger’s, including targeting on its website and app and also when its customers are off-site.
Albertsons has partnerships across the commercial surveillance advertising spectrum, including with Google, the Trade Desk, Pinterest, Criteo, and Meta/Facebook. It also has a video streaming data alliance involving global advertising agency giant Omnicom that expands its reach with viewers of Comcast’s NBCUniversal division, as well as with Paramount and Warner Bros./Discovery.

Both Kroger and Albertsons partner with many of the same powerful identity-data companies, including data-marketing and cross-platform leaders LiveRamp and the Trade Desk. Through these relationships, the two grocery chains are connected to a vast network of data brokers that provide ready access to customer health, financial, and geolocation information, for example. The two grocery chains also work with the same “retail data cloud” company, which further extends their marketing impact. Further compounding the competitive and privacy threats from this deal is its role in providing ongoing “closed-loop” consumer tracking, which helps retailers and advertisers perfect how they measure the effectiveness of their marketing. They know precisely what you bought, browsed, and viewed—in store and at home.

Antitrust NGOs, trade unions, and state attorneys general have sounded the alarm about the pending Albertsons/Kroger deal, including its impact on prices, worker rights, and consumer access to services. As the FTC nears a decision point on this merger, it should make clear that such transactions, which undermine competition and privacy and expand the country’s commercial surveillance apparatus, should not be permitted.

This article was originally published by Tech Policy Press.